I have a confession to make.

My texts, DMs, and emails don’t always come across the way they’re intended. Something as small as a poor choice of words or an ambiguous line reading can leave the recipient thinking I’m being sarcastic or insensitive, or that my tone just isn’t appropriate for the dialog we’re having. Of course, I’m hardly alone in this—in a digital age when text-based exchanges have taken the place of so many voice calls, we’ve all had the experience of causing or taking offense where none was intended.

But I have a secret weapon that helps me make sure that my tone fits my intention. When in doubt, I turn to an editing and speech synthesis tool we’ve developed at Intuit to analyze and manipulate the tone of text-based and voice-based communication. Quite ironically, I’ve dubbed it “Mumbler.” By feeding my message into the tool, I can see at a glance whether I’m coming across as positive or negative, polite or impolite, excited, angry, frustrated, sympathetic, and so on. If I’m striking the wrong tone, I have a chance to fix it before I hit send.

And this goes both ways. When I get a message that rubs me the wrong way, I’ll sometimes feed it into Mumbler to see whether I’m being overly sensitive. More often than not, I’ll get validation that I was right. Mumbler also gives me a screenshot to include in my response (to let the person know that they could have been nicer!).

Admittedly, this is a somewhat whimsical application. But an analyzer like Mumbler is more than a clever gadget to use around the office. It’s a powerful tool for tackling a top challenge facing creators of today’s AI-powered conversational interfaces: how to provide customer experiences that are not only accurate but likable, and how to do so simply and effortlessly, with minimal visual cues.

By making sure that only the intended sentiment is conveyed, we can build deeper empathy, relevance, and meaning into experiences we provide for our customers.

Moving customers—and markets—with likable interactions

It would be easy to dismiss sentiment as mere window-dressing. After all, what matters is the substance of our communications, not their subjective wrapping, right? Not so fast.

How would you feel about a cheery, upbeat note that your home loan application has been denied, or a stern lecture that you’ve overspent your small business budget? What about a piece of good news that’s presented in such an affectless way that it feels like a letdown? Would that make you more or less likely to keep working with that vendor?

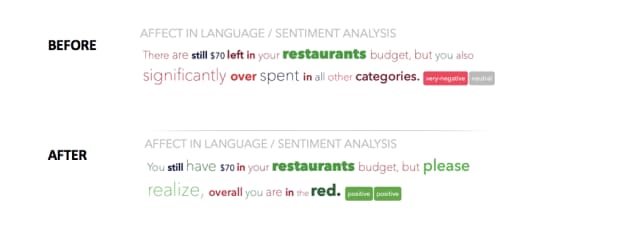

Following is a “before” and “after” conversational user interface (CUI) example, using Mumbler to illustrate how one simple sentence can be written to evoke an entirely different sentiment in a user of a financial management app: “You still have $70 in your restaurant budget, but please realize, overall you are in the red.”

Text-based Chatbot Example

It’s important not to underestimate the impact of likability on adoption and customer satisfaction. That’s especially true in a voice user interface, where visual experience and branding are diminished or entirely absent, but it applies to text-based channels like chatbots as well, as you can see in the example above. The substantive accuracy of an interaction should go without saying; customers should be able to take it for granted.

What differentiates one CUI from another is its impact on a customer’s emotions. Are customers coming away feeling understood and supported by a company that “gets it”? Or has the exchange been marred by false notes that destroy any sense of empathy? As Intuit’s chairman Brad Smith always preaches (à la Maya Angelou), people will remember you not for what you did for them, but for how you made them feel.

Consider the market for voice assistants in the home. Google would seem to have the inside track, given its broader knowledge base and integrated data from customers’ apps for calendaring, maps, and messaging—but in the U.S., Google Home products lag behind Amazon Alexa (Source: Smart Speaker Consumer Adoption Report, March 2019). Both products are used primarily for simple tasks: setting an alarm, checking the weather, playing a song, etc. Both offer similar accuracy and responsiveness.

So why would one outsell the other by more than two to one? To my ear, Alexa sounds more natural and likable. I’m not suggesting that a majority of consumers extensively evaluated and compared these platforms before buying. However, I’d argue that while one is slightly more capable, the other might be slightly more likable.

Intuit is one of many companies focused on likability. At industry conferences, chatbot discussions turn again and again to sentiment. In the past, developers or engineers were often tasked with writing CUI scripts almost as an afterthought, once the technical work was done. I have to admit, I’ve been guilty of that myself.

Clearly, a more thoughtful approach is needed. That’s where an analyzer like Mumbler comes in, giving us a way to make sure we’re conveying the sentiment we intend. To reinforce that focus here at Intuit, each quarter we bring together everyone who works on, or has an interest in, CUI to share and learn best practices for creating great customer experiences.

I’ll have much more to say about CUI, Mumbler, and the nuts and bolts of likability in upcoming blog posts. It’s a fascinating area, and one that will play an increasingly central role in customer relationships for Intuit and across our industry.

In the meantime, you can give Mumbler a try for yourself.